This is a post that serves mostly to try and seed Google with an answer to a question I had, that I couldn't find an existing answer to.

I was working on some macOS Swift code that needed to care about UNIX device nodes (ie block/character devices), which are represented via the dev_t type.

What I specifically needed was to be able to extract the major and minor node numbers from a dev_t, and that's what this does:

import Darwin

extension dev_t {

func major() -> Int32 {

return (self >> 24) & 0xff

}

func minor() -> Int32 {

return self & 0xffffff

}

var description: String {

let major = String(major(), radix: 16)

let minor = String(minor(), radix: 16)

return "\(major), \(minor)"

}

}

Swift 6 is great, but the strict concurrency checking can make interactions with older Apple APIs be... not fun.

Furthermore, older Apple APIs can be less aware of Swift's async features, which becomes particularly relevant if you have adopted actor objects, since access to those is always async.. I recently ran into a situation like this while adding a Finder "action extension" to an app I'm working on, where the code that does the "action" is in an actor.

Apple provides sample code for writing Finder extensions, but it assumes the actual work to be done, is synchronous. Since there wasn't a ton of relevant info online already, I figured I'd blog about it in the hopes that it can save some time for the next person who needs to do this.

Rather than make this blog post huge, I've just added lots of comments to the code so you can follow it in-place. The context is that we are writing an extension that can extract compressed archives (e.g. Zip files) and all of the actual code for interacting with archive files, is in an actor so the UI in our main app stays performant even with very large archives. You are invited to compare this code to Apple's sample code above, to see what my changes actually are.

//

// ActionRequestHandler.swift

// ExtractAction

//

// Created by Chris Jones on 22/05/2025.

//

import Foundation

import UniformTypeIdentifiers

import Synchronization

// This is a helper function that will instantiate our actor, call it with the url from Finder that

// we're extracting, and then return the URL we wrote the archive's contents to.

func extract(_ url: URL) async throws -> URL? {

// This first FileManager call is super weird, but it gives you a private, temporary

// directory to use to write your output files/folders to.

// It's super weird because we don't tell it anything about the action we're currently

// responding to, and the directory it creates is accessible only by our code - the user can't

// go into this directory, nor can root.

// Somehow macOS knows what we're doing and gives us an appropriate directory. I have put no

// effort into trying to understand that, it is what it is.

let itemReplacementDirectory = try FileManager.default.url(for: .itemReplacementDirectory,

in: .userDomainMask,

appropriateFor: URL(fileURLWithPath: NSHomeDirectory()),

create: true)

// Now add the name of the archive (the last path component of `url`) to our temporary

// directory, and create that directory. This is where we'll tell our actor to extract to

let outputFolderName = url.deletingPathExtension().lastPathComponent.deletingPathExtension

var outputFolderURL = itemReplacementDirectory.appendingPathComponent(outputFolderName)

try FileManager.default.createDirectory(at: outputFolderURL, withIntermediateDirectories: true)

// Now create our actor and ask it to extract the archive for us

let someActor = ArchiveExtractor(for: url)

await someActor.extract(to: outputFolderURL)

// Return our extraction directory so the handler below can tell Finder about it.

// Finder will then take care of moving it to the directory the user is in, renaming it

// if any duplicates exist, and offering the user the opportunity to change the name.

return outputFolderURL

}

// This is the class that implements the Finder extension

class ActionRequestHandler: NSObject, NSExtensionRequestHandling {

func beginRequest(with context: NSExtensionContext) {

NSLog("beginRequest(): Starting up...")

// Get the input item from Finder

guard let inputItem = context.inputItems.first as? NSExtensionItem else {

preconditionFailure("beginRequest(): Expected an extension item")

}

// Get the "attachments" from the input item. These are NSItemProviders representing the

// file(s) selected by the user

guard let inputAttachments = inputItem.attachments else {

preconditionFailure("beginRequest(): Expected a valid array of attachments")

}

precondition(inputAttachments.isEmpty == false, "beginRequest(): Expected at least one attachment")

// Similar to Finder giving us NSItemProvider objects for our input files, we also have to create

// NSItemProvider objects for our output files.

// Below we will do that through a complex arrangement of two callback methods which do not

// guarantee that they are called on the main thread. Because of that lack of guarantee, we need

// to ensure we are thread-safe, which we will do be creating a Mutex-wrapped array to store our

// output NSItemProviders in.

let outputAttachmentsStore: Mutex<[NSItemProvider]> = Mutex([])

// This is how we will schedule our final work to be done after all of our output attachments

// have finished doing their callback closures. More on this later.

let dispatchGroup = DispatchGroup()

// Iterate the incoming NSItemProviders

for attachment in inputAttachments {

// Tell the dispatch group that we're starting a new piece of work

// (you can also think of this as incrementing a reference counter)

dispatchGroup.enter()

// Before we can call loadInPlaceFileRepresentation below, we need to know what UTType it

// should load. Usually you'll already know what this is because you operate on one type

// of file.

// In my case, I need to operate on multiple UTTypes, so rather than repeat all this code

// for ~20 types of archive, I just grab the UTType of the incoming NSItemProvider and

// use that to load the FileRepresentation

guard let attachmentTypeID = attachment.registeredTypeIdentifiers.first else { continue }

NSLog("beginRequest(): Discovered source type identifier: \(attachmentTypeID)")

// This uses the input NSItemProvider to locate the file it relates to and pass it to our closure.

// The closure's job is to create an NSItemProvider that can be handed back to Finder, and that provider

// is responsible for producing our output file.

_ = attachment.loadInPlaceFileRepresentation(forTypeIdentifier: attachmentTypeID) { (url, inPlace, error) in

// Once we have finished creating the NSItemProvider, signal to the DispatchGroup

// that we've finished a piece of work (ie decrement the reference counter)

defer { dispatchGroup.leave() }

guard let url = url else {

NSLog("beginRequest(): Unable to get URL for attachment: \(error?.localizedDescription ?? "UNKNOWN ERROR")")

return

}

NSLog("beginRequest(): Found URL: \(url)")

// Enter a context where we have safe access to the contents of our Mutex

outputAttachmentsStore.withLock { outputAttachments in

let itemProvider = NSItemProvider()

// The loadHandler closure for this output NSItemProvider is where we'll call our

// helper function from earlier.

NSLog("beginRequest(): Registering file representation...")

itemProvider.registerFileRepresentation(forTypeIdentifier: UTType.data.identifier,

fileOptions: [.openInPlace],

visibility: .all,

loadHandler: { completionHandler in

NSLog("beginRequest(): in registerFileRepresentation loadHandler closure")

// We can't just directly await our extract() helper function here because we're in a

// syncronous context, not an async one, so we need a Task, but if we just ask for a Task

// on our current thread, it will never execute because the thread will be blocked waiting for it.

// So, we will ask for a detached, ie background, Task to do our work and call the completion handler.

Task.detached {

NSLog("beginRequest(): in Task")

do {

// Now we're in a Task we have an async context, so we can finally call our

// extraction helper function from above

let writtenURL = try await extract(url)

completionHandler(writtenURL, false, nil)

} catch {

completionHandler(nil, false, error)

}

}

return nil

})

// Store the output NSItemProvider in our thread-safe storage.

// Note that at this point, the callback above hasn't actually run yet, and doesn't run until

// Finder is ready to call it at some point in the future.

outputAttachments.append(itemProvider)

NSLog("beginRequest(): Adding provider output, there are now \(outputAttachments.count) providers")

}

}

}

// This is the second piece of the DispatchGroup magic.

// This schedules a closure to run on the main queue when the DispatchGroup

// has been drained of tasks (ie the reference counter has reached zero).

// We can't do something like dispatchGroup.wait() because that would block the queue

// and prevent the various NSItemProvider callbacks from executing.

// Instead, the main queue of this extension's process just keeps ticking along

// until the final call to dispatchGroup.leave().

// This means you can deadlock the process if that never happens, but that

// should only happen if your actor gets stuck indefinitely.

dispatchGroup.notify(queue: DispatchQueue.main) {

NSLog("beginRequest(): DispatchGroup completed")

// This is the thing we have to return to Finder, and it will contain attachments for all of the

// NSItemProviders we want to have exist.

let outputItem = NSExtensionItem()

let result = outputAttachmentsStore.withLock { (outputAttachments: inout sending [NSItemProvider]) -> [NSItemProvider] in

// Action Extensions have an interesting quirk - if you don't return all of the input files

// Finder will assume you've transformed them and will delete them. We don't want that, so we

// will check we're not going to miss any.

if inputAttachments.count < outputAttachments.count {

NSLog("beginRequest(): Did not find enough output attachments")

return []

}

// We can't return `outputAttachments` because it's isolated by the Mutex, but we know no further

// changes will happen at this point, and NSItemProvider conforms to NSCopying,

// so we can just return an array of copies that is free of any isolation issues.

return outputAttachments.compactMap { $0.copy() as? NSItemProvider }

}

if result.isEmpty {

context.cancelRequest(withError: ArkyveError(.extract, msg: "Unable to extract archive"))

return

}

// As mentioned above, we want to tell Finder to not delete all of the input files

// So we return those NSItemProviders as well as the ones we created.

outputItem.attachments = inputAttachments + result

NSLog("beginRequest(): Success, calling completion handler")

context.completeRequest(returningItems: [outputItem], completionHandler: nil)

}

}

}

All of my smart home stuff is running in Home Assistant, and I'm using their OS on a Mini PC.

One of the things I'm running on that OS is the Scrypted Add-On to bridge my generic RTSP cameras into HomeKit, and to use Scrypted's NVR.

By default, Scrypted will be storing NVR recordings in HassOS' data partition. I wanted to make sure that even if the NVR goes wild and fills the disk, the rest of my Home Assistant system wouldn't have to deal with running out of disk space, so I started looking for a way to configure HassOS and/or Scrypted to store the NVR recordings elsewhere. Turns out that isn't directly possible, but there is a very useful feature of HassOS that makes it possible.

Specifically, that feature is that you can import udev rules. With that I can ensure that a dedicated partition can be mounted in the location that the NVR recordings will be written, and now even if Scrypted goes haywire and runs that partition out of space, the rest of the system will function normally.

The official documentation for how to import udev rules (amongst many other useful things) is here, but the approximate set of steps is:

- Arrange for there to be a partition available on your Home Assistant OS machine, formatted as

ext4, with the label NVR. I put a second disk in, so it's completely separate from the boot disk which Home Assistant OS may choose to modify later.

- Format a USB stick as FAT32, named

CONFIG

- Create a directory on that stick with the name

udev

- In that

udev folder create a plain text file called 80-mount-scrypted-nvr-volume.rules

- Place the udev rules below in that file

- Put the USB stick in your Home Assistant OS machine and, from a terminal, run

ha os import

The contents of that rules file should be:

# This will mount a partition with the label "NVR" to: /mnt/data/supervisor/addons/data/09e60fb6_scrypted/scrypted_nvr/

# Import partition info into environment variables

IMPORT{program}="/usr/sbin/blkid -o udev -p %N"

# Exit if the partition is not a filesystem

ENV{ID_FS_USAGE}!="filesystem", GOTO="abort_rule"

# Exit if the partition isn't for NVR data

ENV{ID_FS_LABEL}!="NVR", GOTO="abort_rule"

# Store the mountpoint

ENV{mount_point}="/mnt/data/supervisor/addons/data/09e60fb6_scrypted/scrypted_nvr/"

# Mount the device on 'add' action (e.g. it was just connected to USB)

ACTION=="add", RUN{program}+="/usr/bin/mkdir -p %E{mount_point}", RUN{program}+="/usr/bin/systemd-mount --no-block --automount=no --collect $devnode %E{mount_point}"

# Umount the device on 'remove' action (a.k.a unplug or eject the USB drive)

ACTION=="remove", ENV{dir_name}!="", RUN{program}+="/usr/bin/systemd-umount %E{mount_point}"

# Exit

LABEL="abort_rule"

It's likely a good idea to reboot the Home Assistant OS machine at this point.

Now you can go to Scrypted's web UI, into the Scrypted NVR plugin and configure it to use /data/scrypted_nvr as its NVR Recordings Directory. The Available Storage box there will show the correct free space for the new volume.

And that's it!

Notes:

- As far as I know, the mountpoint for the Scrypted add-on should be stable, but I can't promise this.

- This should be very safe as it will ignore any partition that isn't labelled

NVR.

- This should work with removable disks (e.g. USB), however, the Scrypted addon will not be stopped if you unplug the disk, so I would strongly recommend not doing that without stopping Scrypted first.

I run most of my home services in Docker, and I decided it was time to migrate Tailscale from the host into Docker too.

This turned out to be an interesting journey, but I figured I'd talk about it here for anyone else hitting the same issues.

Here is my resulting Docker compose yaml:

tailscale:

hostname: tailscale

image: tailscale/tailscale:latest

restart: unless-stopped

network_mode: "host" # Easy mode

privileged: true # I'm only about 80% sure this is required

volumes:

- /srv/ssdtank/docker/tailscale/data:/var/lib # tailscale/tailscale.state in here is where our authkey lives

- /dev/net/tun:/dev/net/tun

- /var/run/dbus/system_bus_socket:/var/run/dbus/system_bus_socket # This seems kinda terrible, but the daemon complains a lot if it can't connect to this

cap_add: # Required

- NET_ADMIN

- NET_RAW

environment:

TS_HOSTNAME: "lolserver"

TS_STATE_DIR: "/var/lib/tailscale" # This gives us a persistent entry in TS Machines, rather than Epehmeral

TS_USERSPACE: false # Bizarrely, even if you bind /dev/net/tun in, you still need to tell the image to not use userspace networking

TS_AUTH_ONCE: false # If you have a config error somewhere, and this is set to true, it'll be really hard to figure it out

TS_ACCEPT_DNS: false # I don't want TS pushing any DNS to me.

TS_ROUTES: "10.0.88.0/24,10.0.91.0/24" # Docs say this is for accepting routes. Code says it's for advertising routes. Awesome.

TS_EXTRA_ARGS: "--advertise-exit-node"

labels:

com.centurylinklabs.watchtower.enable: "true"

Important things to note are:

TS_STATE_DIR is useful if you want a persistent node rather than an Ephemeral one (I'm not running this as part of some app deployment, this is LAN infrastructure)TS_USERSPACE shouldn't just always default to true, it should check if /dev/net/tun is available, but it doesn't, so you have to force it to false if you want kernel networking.TS_AUTH_ONCE is great, but if you have an error in the lower level networking setup, having this set to true will hide it on restarts of the container. I suggest keeping this false.TS_ROUTES is currently wrong in the documentation. It is described as being for accepting routes from other hosts, but it's actually for advertising routes to other hosts.

Introduction

Hammerspoon now has per-commit development builds generated automatically by a GitHub Actions workflow.

This was a surprisingly slow and painful process to set up, so here are some things I learned along the way.

I prefer scripts to actions

There are tons of third party GitHub Actions available in their marketplace. Almost every time I use one, I come to regret it and end up switching to just running a bash script.

More useful checkouts

If you want to do anything other than interact with the current code (e.g. access tag history) you'll find it fails. Add the fetch-depth argument to actions/checkout:

- name: Checkout foo

uses: actions/checkout@v2

with:

fetch-depth:0

Checking out a private repo from a public one is weirdly hard

Since these development builds are signed, they need access to a signing key. GitHub has a system for sharing secrets with a repo, but it's limited to 64KB values. For anything else, you need to encrypt the secrets in a repo and set a repository secret with the passphrase.

It seemed to me like it would be a good idea to keep the encrypted secrets in a private repository that the build process would check out, so the ciphertext is never exposed to the gaze of the Internet.

Unfortuantely, GitHub's OAuth scopes only allow you to give full read/write permission to all repositories a user can access, there's no way to grant read-only access.

So, I decided it was safer to just try and be extra-careful about how I encrypt my secrets, and keep them in a public repository.

Code signing a macOS app in CI needs a custom keychain

The default login keychain requires a password to unlock, so if you put a signing certificate there, your CI builds will hang indefinitely waiting for a password to be entered into a UI dialog you can't see.

I took some ideas from the devbotsxyz action and a couple of blog posts, to come up with my own script to create a keychain, unlock it, import the signing certificate, disable the keychain's lock timeout, and allow codesigning tools to use the keychain without a password.

Xcode scrubs the inherited environment

Update: This is not actually true. When I wrote this item, I had forgotten that our build system included a Makefile and it's make not Xcode that was scrubbing the environment.

Normally, you can use environment variables like $GITHUB_ACTIONS to determine if you're running in a CI-style situation. I use this for our test framework to detect CI so certain tests can be skipped.

Unfortunately, it seems like xcodebuild scrubs the environment when running script build phases, so instead I created an empty file on disk that the build scripts could check for:

- name: Workaround xcodebuild scrubbing environment

run: touch ../is_github_actions

This allows us to skip things like uploading debug symbols to Sentry.

You can't upload artifacts from strange paths

The actions/upload-artifact action will refuse to upload any artifacts that have ../ or ./ in their path. I assume this is for security reasons, but that makes no sense because all you have to do is move/copy the file you want into the runner's $PWD and you can upload them:

- name: Prepare artifacts

run: mv ../archive/ ./

- name: Upload artifact

uses: actions/upload-artifact@v2

with:

name: foo

path: archive/foo

It's pretty easy to verify your code signature, Gatekeeper acceptance, entitlements and notarization status

For Hammerspoon these are part of a more complex release script library, but in essence these are the commands that you can use to either check return codes, or outputs, for whether your app is as signed/notarized/entitled as you expect it to be:

# Check valid code signature

if ! codesign --verify --verbose=4 "/path/to/Foo.app" ; then

echo "FAILED: Code signature check"

fi

# Check valid code signing entity

MY_KNOWN_GOOD_ENTITY="Authority=Developer ID Application: Jonny Appleseed (ABC123ABC)"

ACTUAL_SIGNER=$(codesign --display --verbose=4 "/path/to/Foo.app" 2>&1 | grep ^Authority | head -1)

if [ "${ACTUAL_SIGNER}" != "${MY_KNOWN_GOOD_ENTITY}" ]; then

echo "FAILED: Code signing authority"

fi

# Check Gatekeeper acceptance

if ! spctl --verbose=4 --assess --type execute "/path/to/Foo.app" ; then

echo "FAILED: Gatekeeper acceptance"

fi

# Check Entitlements match

EXPECTED=$(cat /path/to/source/Foo.entitlements)

ACTUAL=$(codesign --display --entitlements :- "/path/to/Foo.app")

if [ "${ACTUAL}" != "${EXPECTED}" ]; then

echo "FAILED: Entitlements"

fi

I do these even on local release builds, to ensure nothing was missed before pushing out a release, but they also make sense to do in CI.

That's it

Not a ground-shaking set of things to learn, but combined they took several hours to figure out, so maybe this post saves someone else some time.

(If you don't want to read this whole thing, skip to the end of the post for a tl;dr version)

I was lucky enough to get a Labists X1 3D printer for Christmas a few weeks ago, and it's the first 3D printer I've had or really even interacted with.

It's been a fascinating journey so far, learning about how to calibrate a printer, how to use slicers, and how to start making my own models.

Something that became obvious fairly quickly though, was that the printer would be more reliable with a heated bed. I've been able to get reliable prints via the use of rafts, but that adds time to prints and wastes filament, so I decided to see if I could mod the printer to have a heated bed.

I started Googling and quickly discovered that my printer is actually a rebadged EasyThreed X1 and that EasyThreed sell a hotbed accessory for the X1, but it's an externally powered/controlled device. That's fine in theory, but I have quickly gotten very attached to being able to completely remotely control the printer via Octoprint. So, the obvious next step was to try and mod the printer to be able to drive the heater directly.

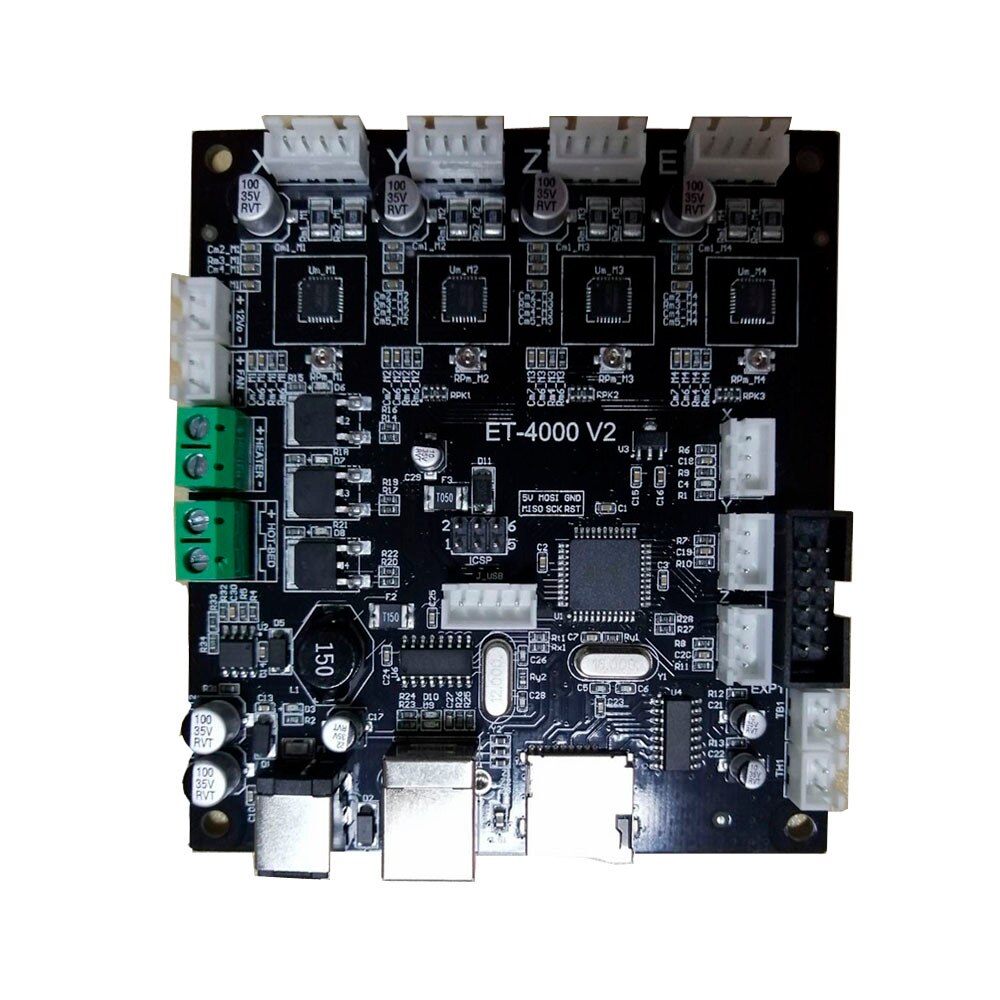

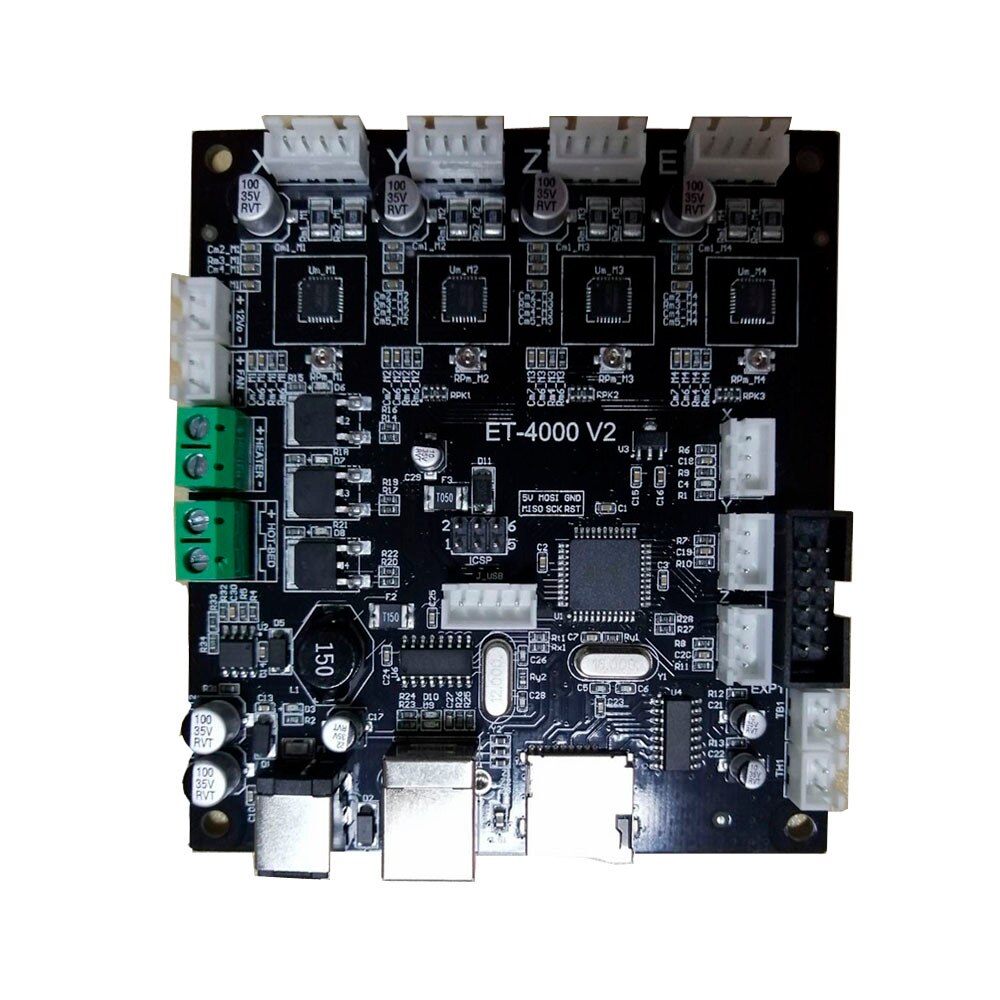

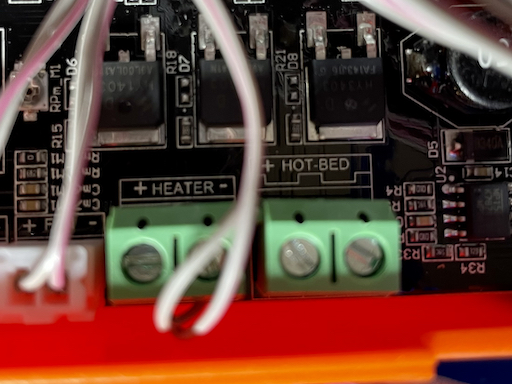

Looking inside the controller box showed a pretty capable circuit board:

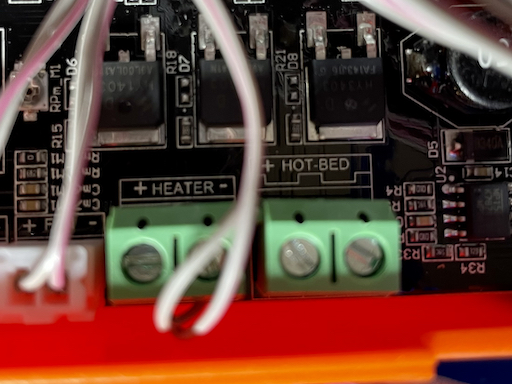

but it was instantly obvious that next to the power terminal for the extruder heater, was a terminal labelled HOT-BED:

Next on my journey of discovery was the communication info that Octoprint was sending/receiving, among which I saw:

Recv: echo:Marlin 1.1.0-RC3

which quickly led me to the Marlin open source project which, crucially, is licensed as GPL. For those who don't know, GPL means that since Labists have given me a binary of Marlin in the printer, they have to give me the source code if I ask for it.

I reached out to Labists and they were happy to supply the source, then I also emailed EasyThreed to ask if I could have their source for the X1 as well (and while I was at it, their X3 printer, which looks a lot like the X1, but ships with a heated bed already as part of the product). They sent me the source with no real issues, so I grabbed the main Marlin repo, checked out the tag for 1.1.0-RC3 and started making branches for the various Labists/EasyThreed source trees I'd acquired. Since their changes were a bit gratuitous in places (random whitespace changes, DOS line endings, tabs, etc) I cleaned them up quite a bit to try and isolate the diffs to code/comment changes.

Since it's all GPL, I've republished their code with my cleanups:

The specific diffs aren't particularly important (although the Labists firmware does have some curious changes, like disabling thermal runaway protection), but by reading up a bit on configuring Marlin, and comparing the differences between the X3 and the X1, it seemed like very little would need to change to enable the bed heater and its temperature sensor (a header for which is also conveniently present on the controller board).

At this point in the investigation I had:

- A controller board with:

- A power terminal for a bed heater

- A header for a bed temperature sensor

- Source for the controller firmware

- Source for an extremely similar printer that has a bed heater

- An external bed heater with power and sensor cables

Not a bad situation to be in!

Diving into the firmware, I found that Marlin keeps most board-specific settings in Configuration.h and specifically, it contains #define TEMP_SENSOR_BED 0. The number that TEMP_SENSOR_BED is defined as, indicates to Marlin what type of temp sensor is attached (with 0 obviously meaning nothing is attached). The X3 has a value of 1 (a 100k thermistor), but I found that I could only get reliable readings with it set to 4 (a 10k thermistor).

Believe it or not, that's actually the only thing that has to change, but I did also change #define BED_MAXTEMP 150 because 150C seems kind of high. This define sets a temperature at which Marlin will shut itself down as a safety measure. As far as I can tell, 50C-70C is a more realistic range for PLA, and even with ABS it seems as though 110C is recommended. I haven't printed ABS yet and don't have any real plans to, so I reduced the safety limit to 100C.

I also modified the build version strings in Default_Version.h so I'd be able to quickly tell in Octoprint if I had successfully uploaded a new firmware.

Next came the challenge of building the firmware. I grabbed the latest Arduino IDE, but it failed to compile Marlin correctly (perhaps because I was using the macOS version of Ardino IDE). Labists helpfully included a Windows build of Arduino IDE 1.0.5 with their firmware source, which was able to build it. Arduino IDE is also GPL, but I haven't republished that yet because I haven't audited the archive for other things that I don't have rights to distribute.

To get the firmware to upload correctly to the X1, I had to set the board type in Arduino IDE to Melzi and select the COM port for its USB interface, except its USB interface wasn't showing up and Windows' Device Manager couldn't find a driver for it. Some Googling for the USB VID/PID of that device led me to the manufacturer of the CH340 chipset and their drivers.

Finally the moment of truth - was I about to destroy a controller board with a bad firmware/driver? I clicked the Upload button, waited for it to complete, attached the controller to my Octoprint machine again and.......

Recv: echo:Marlin 1.1.0-RC3-cmsj

Success! I then waited for Octoprint to start communicating with the printer and monitoring temperatures...

Recv: ok T:24.2 /0.0 B:23.6 /0.0 T0:24.2 /0.0 @:0 B@:0

For those of you who aren't familiar with Octoprint/GCode, the T:24.2 is the temp sensor in the extruder and the B23.6 is the reading from the bed sensor! Another success!

After replacing the X1's 30W power supply with a 60W variant so it could power the motors and the heater, I asked it to heat up to 50C, and after a little while....

Recv: ok T:28.1 /0.0 B:49.9 /50.0 T0:28.1 /0.0 @:0 B@:127

Perfect!

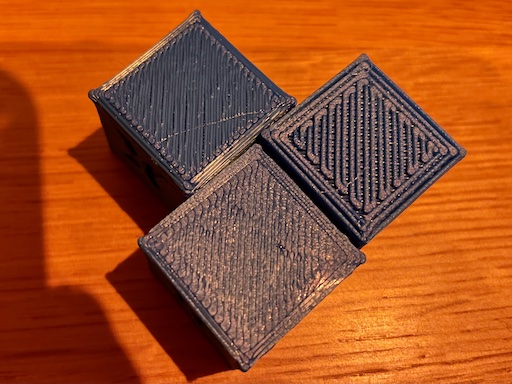

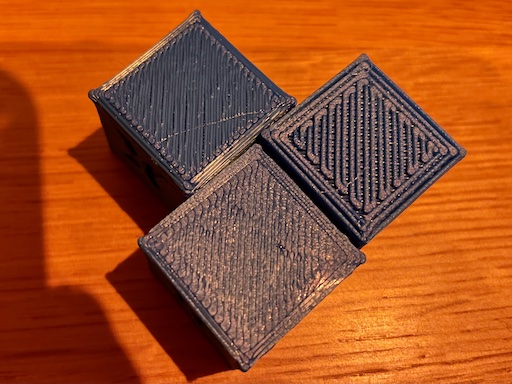

And here is the first test print I did, to make sure everything else was still working:

The cubes on the left are from before the heated bed, where I was having to level the bed closer to the nozzle to get enough adhesion and the cube on the right is the first print with the heated bed. I think the results speak for themselves - much better detail retention. It's not visible, but the "elephant's foot" is gone too!

This has been a super rewarding journey, and I'm incredibly grateful to all the people in the 3D printing community upon whose shoulders I am standing. It's a rare and beautiful thing to find a varied community of products, projects and people, all working on the same goals and producing such high quality hardware and software along the way.

And now the tl;dr version

If you want to do this mod to your X1, here are some things you should know, and some things you will need:

- I am not responsible for your printer. This is a physical and firmware mod, please be careful and think about what you're doing.

- Buy the official hotbed accessory, open its control box and unplug the temperature sensor cable. If for some reason you use a different hotbed, it needs to be 12V, draw no more than 30W, and your temp sensor will need to be something that Marlin can understand via the

TEMP_SENSOR_BED define.

- Buy a 12V 5A barrel plug power supply (I used this one but there are a million options). Use this from now on to power your X1.

- Grab the modified Marlin source from my GitHub repo:

- Either EasyThreed X1 - see the precise changes from EasyThreed's firmware here

- Or Labists X1 - this has more changes than the EasyThreed version, since I pulled back in some of Labists changes, but left thermal runaway protection enabled.

- Install the CH340 USB Serial drivers. There seem to be lots of places to get these from, I used these

- Install Arduino IDE 1.0.5 - still available from the bottom of this page

- In Arduino IDE, open the

Marlin.ino file from the Marlin directory and click the ✔ button on the toolbar, this will compile the source so you can check everything is installed correctly.

- If you plan to print PLA, you might want to increase the

BED_MAXTEMP define to something higher than 100.

- Remove the bed-levelling screws from your X1, swap the original bed for the heated one.

- Open the controller box of your X1, plug the bed's thermal sensor into the controller board in the

TB1 header.

- Wire the bed's power into the green

HOT-BED terminal. For the best results you probably want to unsolder the original power cable from the bed and use something thinner and more flexible (but at the very least you need something longer).

- Reassemble the controller box and run all the wires neatly. I recommend you manually move the bed around to make sure neither the power nor temp sensor wires snag on anything.

- Connect the controller box's USB port to your PC, and in Arduino IDE click the ➡ button to compile and upload the firmware. Wait until it says

Upload complete.

- In theory, you're done! Check the temperature readings in some software that can talk to the printer (Octoprint, Pronterface, etc.), tell it to turn the bed heater on and make sure the temps rise to the level you asked for. I would definitely encourage you to do this while next to the printer, in case something goes dangerously wrong!

Previously I wrote about how I'd tried to create an app, but ultimately failed because I wasn't getting the results I wanted out of the macOS CoreAudio APIs.

Thanks to some excellent input from David Lublin I refactored the code to be able to switch easily between different backend audio APIs, and implemented a replacement for CoreAudio using AVFoundation's AVCaptureSession and it seems to work!

I'd still like to settle back on CoreAudio at some point, but for now I can rest assured that whenever the older versions of SoundSource stop working, I still have a working option.

I recently had the need to migrate someone in my family off an old ISP email account, onto a more modern email account, without simply shutting down the old account. The old address has been given out to people/companies for at least a decade, so it's simply not practical to stop receiving its email.

Initially, I used the ISP's own server-side filtering to forward emails on to the new account and then delete them, however, all of the fantabulous anti-spam technologies that are used these days, conspired to make it unreliable.

So instead, I decided that since I can access IMAP on both accounts, and I have a server at home running all the time, I'd just use some kind of local tool to fetch any emails that show up on the old account and move them to the new one.

After some investigation, I settled on imapsync as the most capable tool for the job. It's ultimately "just" a Perl script, but it's fantastically well maintained by Gilles Lamiral. It's Open Source, but I'm a big fan of supporting FOSS development, so I happily paid the 60€ Gilles asks for.

My strong preference these days is always to run my local services in Docker, and fortunately Gilles publishes an official imapsync Dockule so I set to work in Ansible to orchestrate all of the pieces I needed to get this running.

The first piece was a simple bash script that calls imapsync with all of the necessary command line options:

#!/bin/bash

# This is /usr/local/bin/imapsync-user-isp-fancyplace.sh

/usr/bin/docker run -u root --rm -v/root/.imap-pass-isp.txt:/isp-pass.txt -v/root/.imap-pass-fancyplace.txt:/fancyplace-pass.txt gilleslamiral/imapsync \

imapsync \

--host1 imap.isp.net --port1 993 --user1 olduser@isp.net --passfile1 /isp-pass.txt --ssl1 --sslargs1 SSL_verify_mode=1 \

--host2 imap.fancyplace.com --port2 993 --user2 newuser@fancyplace.com --passfile2 /fancyplace-pass.txt --ssl2 --sslargs2 SSL_verify_mode=1 \

--automap \

--nofoldersizes --nofoldersizesatend \

--delete1 --noexpungeaftereach \

--expunge1

Please test this with the --dry option if you ever want to do this - the --automap option worked incredibly well for me (even translating between languages for folders like "Sent Messages"), but check that for yourself.

What this script will do is start a Docker container and run imapsync within it, which will then check all folders on the old IMAP server and sync any found emails over to the new IMAP server and then delete them from the old server. This is unfortunately necessary because the old ISP in question has a pretty low storage limit and I don't want future email flow to stop because we forgot to go and delete old emails. imapsync appears to be pretty careful about making sure an email has synced correctly before it deletes it from the old server, so I'm not super worried about data loss.

The IMAP passwords are read from files that live in /root/ on my server (with 0400 permissions) and they are mounted through into the container. For the new IMAP account, this is a "per-device" password rather than the main account password, so it won't change, and is easy to revoke.

This isn't a complete setup yet though, because after doing one sync, imapsync will exit and Docker will obey its --rm option and delete the container. What we now need is a regular trigger to run this script and while this used to mean cron, nowadays it could also mean a systemd timer. So, I created a simple systemd service file which gets written to /etc/systemd/system/imapsync-user-isp-fancyplace.service and enabled in systemd:

[Unit]

Description=User IMAP Sync

After=docker.service

Requires=docker.service

[Service]

Type=oneshot

ExecStart=/usr/local/bin/imapsync-user-isp-fancyplace.sh

Restart=no

TimeoutSec=120

and a systemd timer file which gets written to /etc/systemd/system/imapsync-user-isp-fancyplace.timer, and then both enabled and started in systemd:

[Unit]

Description=Trigger User IMAP Sync

[Timer]

Unit=imapsync-user-isp-fancyplace.service

OnUnitActiveSec=10min

OnUnitInactiveSec=10min

Persistent=true

[Install]

WantedBy=timers.target

This will trigger every 10 minutes and start the specified service, which executes the script that starts the Dockule to sync the email. Simple!

And just to show a useful command, you can check when the timer last triggered, and when it will trigger next, like this:

# systemctl list-timers

NEXT LEFT LAST PASSED UNIT ACTIVATES

Mon 2019-05-20 17:38:13 BST 27s left Mon 2019-05-20 17:28:13 BST 9min ago imapsync-user-isp-fancyplace.timer imapsync-user-isp-fancyplace.service

[snip unrelated timers]

9 timers listed.

Pass --all to see loaded but inactive timers, too.

I've just published https://github.com/cmsj/HotMic/ which contains a very good amount of a macOS app I had hoped to complete and sell for a couple of bucks on the Mac App Store.

However, I failed to get it working, primarily because I don't know enough of CoreAudio to get it working, and because I burned almost all of the time I had to write the app, fighting with things that, it turns out, were never going to work.

So, chalk that one up to experience I guess. Maybe the next person who has this idea will find my repo and spend their allotted time getting it to work :)

For the curious, the app's purpose was to be a Play Through mechanism for OS X. What is a Play Through app? It means the app reads audio from one device (e.g. a microphone or a Line In port) and writes it out to a different device (e.g. your normal speakers). This lets you use your Mac as a very limited audio mixer. I want it so the Line Out from my PC can be connected to my iMac - then all of my computer audio comes out of one set of speakers with one keyboard volume control setup.

For the super curious, I'd be happy to get back to working on the app if someone who knows more about Core Audio than I do, wants to get involved!

I'm sure many of us receive regular emails from the same source - by which I mean things like a daily status email from a backup system, or a weekly newsletter from a blogger/journalist we like, etc.

These are a great way of getting notified or kept up to date, but every one of these you receive is also a piece of work you need to do, to keep your Inbox under control. Gmail has a lot of powerful filtering primitives, but as far as I am able to tell, none of them let you manage this kind of email without compromise.

My ideal scenario would be that, for example, my daily backup status email would keep the most recent copy in my Inbox, and automatically archive older ones. Same for newsletters - if I didn't read last week's one, I'm realistically never going to, so once it's more than a couple of weeks stale, just get it out of my Inbox.

Thankfully, Google has an indirect way of making this sort of thing work - Google Apps Script. You can trigger small JavaScript scripts to run every so often, and operate on your data in various Google apps, including Gmail.

So, I quickly wrote this script and it runs every few hours now:

// Configuration data

// Each config should have the following keys:

// * age_min: maps to 'older_than:' in gmail query terms

// * age_max: maps to 'newer_than:' in gmail query terms

// * query: freeform gmail query terms to match against

//

// The age_min/age_max values don't need to exist, given the freeform query value,

// but age_min forces you to think about how frequent the emails are, and age_max

// forces you to not search for every single email tha matches the query

//

// TODO:

// * Add a per-config flag that skips the archiving if there's only one matching thread (so the most recent matching email always stays in Inbox)

var configs = [

{ age_min:"14d", age_max:"90d", query:"subject:(Benedict's Newsletter)" },

{ age_min:"7d", age_max:"30d", query:"from:hello@visualping.io subject:gnubert" },

{ age_min:"1d", age_max:"7d", query:"subject:(Nightly clone to Thunderbay4 Successfully)" },

{ age_min:"1d", age_max:"7d", query:"from:Amazon subject:(Arriving today)" },

];

function processInbox() {

for (var config_key in configs) {

var config = configs[config_key];

Logger.log("Processing query: " + config["query"]);

var threads = GmailApp.search("in:inbox " + config["query"] + " newer_than:" + config["age_max"] + " older_than:" + config["age_min"]);

for (var thread_key in threads) {

var thread = threads[thread_key];

Logger.log(" Archiving: " + thread.getFirstMessageSubject());

thread.markRead();

thread.moveToArchive();

}

}

}

(apologies for the very basic JavaScript - it's not a language I have any real desire to be good at. Don't @ me).